2025-09-02

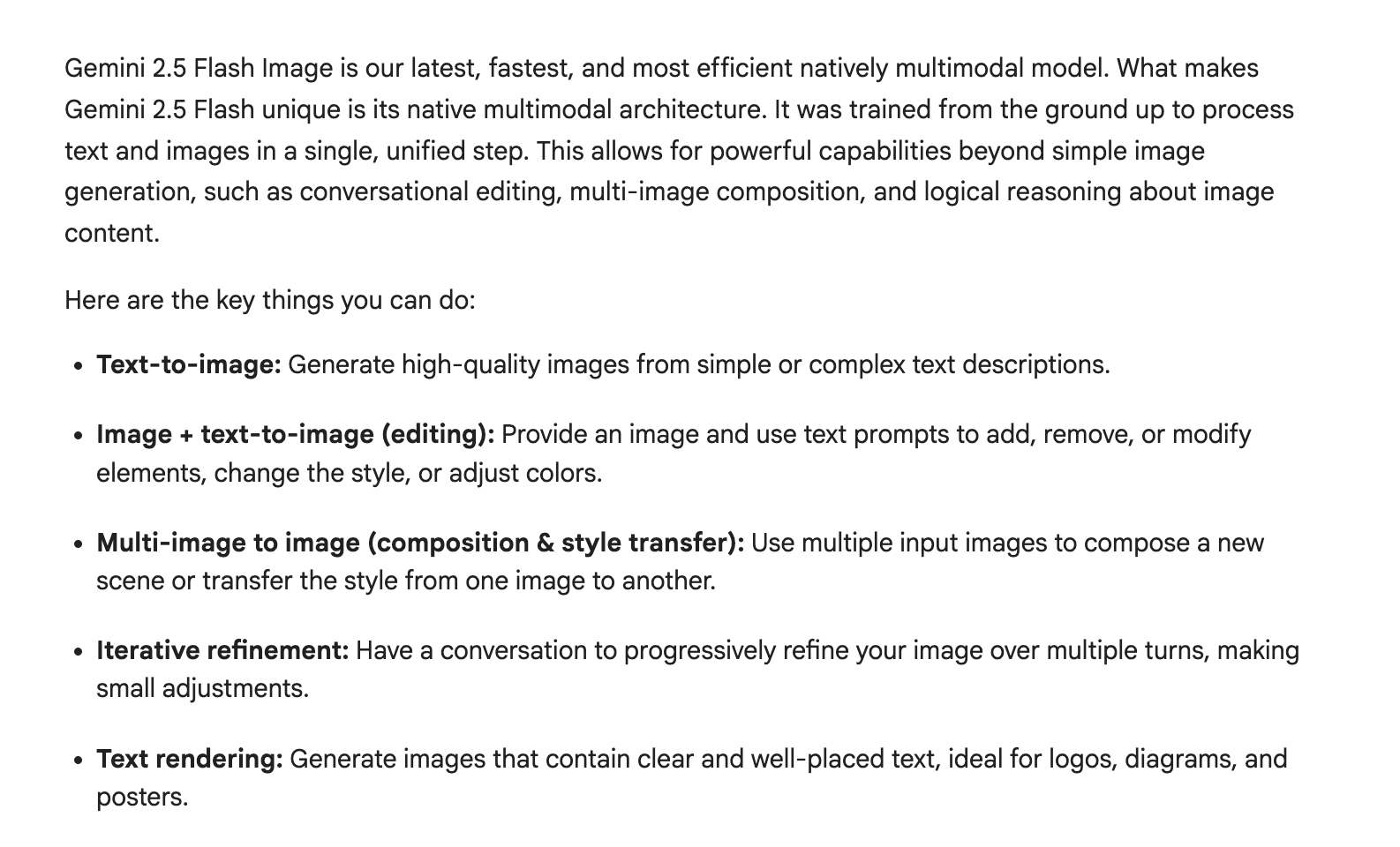

Today we explore how vision models mirror biological brains, how diffusion LMs internalize answers before decoding, and how agent frameworks structure multi-stage reasoning. Plus, Google’s “Nano Banana” (the Gemini 2.5 Flash Image upgrade) shows how interfaces are becoming cognitive tools themselves, enabling prompt-based image editing that keeps characters and objects consistent across edits.

Top picks from aigc.news:

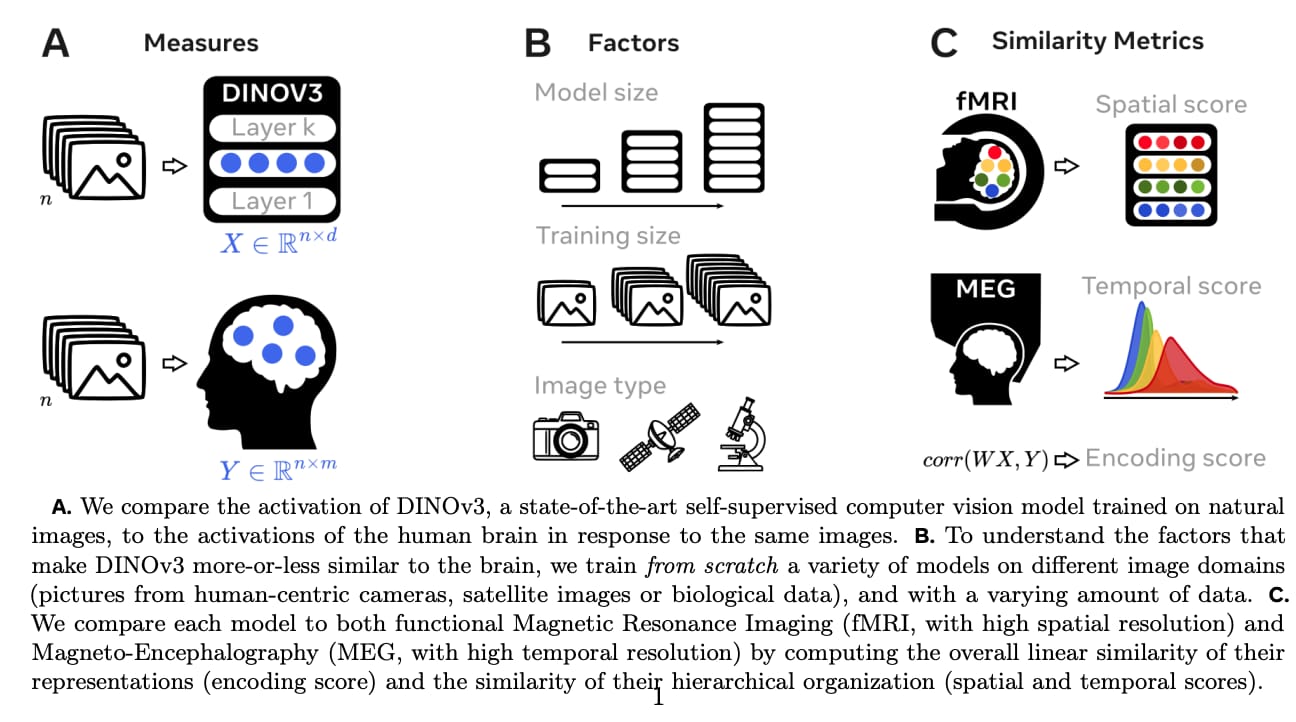

🧠 Convergent Brains – Vision models mirror biological features of primate visual cortex

💭 DLMs Think First – Diffusion LMs internalize answers before decoding

🍌 Nano Banana Best Practices – Prompting insights for Gemini’s multi-step, consistency-focused image editor

Explore more papers, tools, and signals — updated daily at aigc.news

📚 AIGC PAPERS

Today’s AIGC Papers

From convergent patterns between brains and vision models, to how Diffusion LMs “think” before decoding, and a new blueprint for multi-phase agent reasoning — today’s picks dig deep into AI cognition and control.

Convergent Brains – Vision models mirror biological features

DLMs Think First – Diffusion LMs internalize answers before decoding

rStar2-Agent – A blueprint for multi-phase agentic reasoning

Explore the code, read the insights.

🛠️ AIGC Projects

Today’s AIGC Projects

From translation-focused LLMs to explorable 3D world generation and quantized image editing tools, today’s projects open new doors for building faster and smarter.

Hunyuan-MT-7B – Tencent's open-source multilingual LLM for translation tasks

HunyuanWorld-Voyager – Tencent's video diffusion model for generating explorable, 3D-consistent scenes from a single image

Qwen-Image-Edit-GGUF – Quantized version of Qwen’s image editing model

Build faster with open tools.

tencent/Hunyuan-MT-7B (link)

HunyuanWorld-Voyager (link)

QuantStack/Qwen-Image-Edit-GGUF (link)

🗞️ AIGC News

Today’s AIGC News

From Google's official tips for image generation to the architectural secrets behind vLLM’s blazing speed — today’s updates are must-reads for prompt engineers and infra devs alike.

Gemini 2.5 Flash Image – Best practices for prompting image generation

Inside vLLM – Dissecting the architecture behind lightning-fast LLM inference

Stay sharp with the latest breakthroughs.

⭐️⭐️⭐️⭐️ How to prompt Gemini 2.5 Flash Image Generation for the best results ( link )

Inside vLLM: Anatomy of a High-Throughput LLM Inference System (link)

Always fresh, always live

Real-time AIGC tracker

New papers and projects updated by the minute — stay ahead of the AI curve.

That’s it for today.

Keep showing up, keep cheering each other on, and as always, run happy! 🏃‍♂️💛

The aigc.news Team